In my development workflow (DevOps and scripting, mainly – I’m a security practitioner, not a programmer) I frequently switch between Windows and WSL. I work a lot with Ansible, and I love the fact that with WSL, I can enable full Ansible linting support in Visual Studio Code. The problem is that there are known cross-OS filesystem performance issues with WSL 2. At the moment, Microsoft recommends that if you require “Performance across OS file systems”, you should use WSL 1. Yuck.

What I want to do is to have a folder on my Windows hard drive, C:\Repos, that contains all the repositories I use. I want that same folder to be available in WSL as the directory /repos. Network file shares are out of the question, because, performance. (Have you tried git operations on a CIFS share? Ugh.)

The old way – share from Windows to WSL

Until this week, I’d been sharing the Windows directory into WSL distros using /etc/fstab and automount options. Fstab contained this line:

```
C:/Repos /repos drvfs uid=1000,gid=1000,umask=22 0 0
```

And /etc/wsl.conf:

```ini
[automount]
options="metadata,umask=0033"
```

But with this setup, every so often WSL filesystem operations would grind to a halt and I’d need to terminate and restart the distro. It could be minutes or days before the problem resurfaced.

The Windows Subsystem for Linux September 2023 update, currently available only for Windows Insider builds, was supposed to fix some of the issues. I tried it. The fixes broke Docker and didn’t improve filesystem performance sufficiently. Even after a Docker upgrade (the Docker folks have been working with the WSL team on this), port mapping remained broken.

The new way – share from WSL to Windows

So let’s fix this once and for all. Maybe the WSL filesystem performance issues will go away one day, but I need to get on with my work today, not at some unspecified point in the future. I also don’t like running insider builds, and neither did my endpoint protection software. (That’s another story.) In the meantime, we need to move the files into WSL, where the performance issues disappear. It’s cross-OS access that causes the problems.

Now I know about \\wsl.localhost, but unfortunately this confuses some of the programs I use day-to-day, including some VS Code plugins. I really need all Windows programs to behave as though the files are on my hard drive.

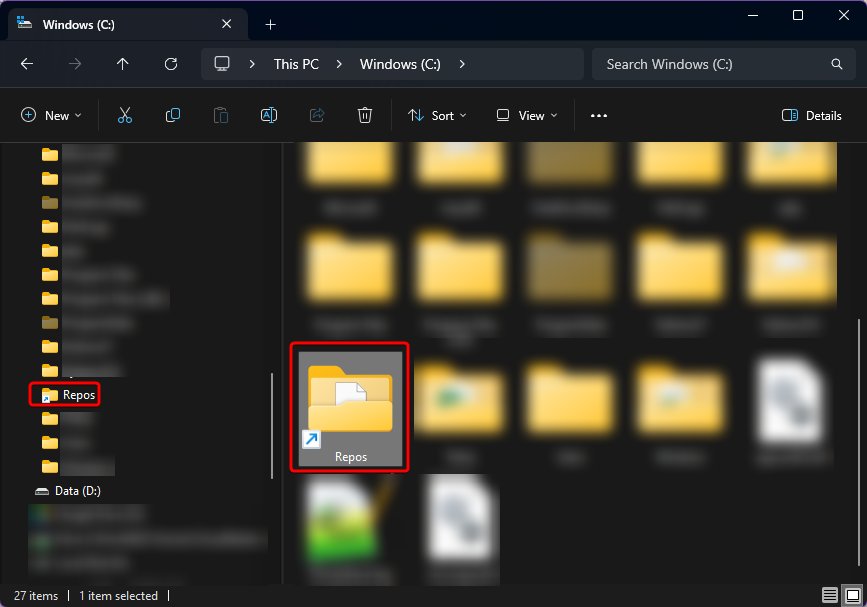

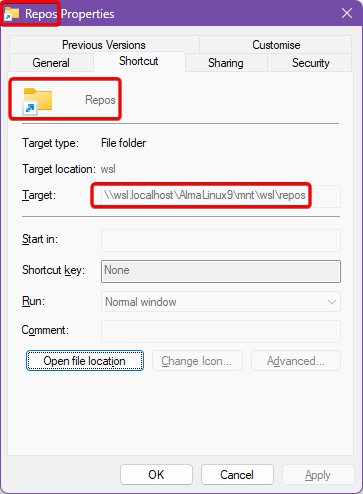

After much pulling together of information from dark, dusty corners of the internet, I discovered that, with the latest versions of Windows, you can create symbolic links to the WSL filesystem. So the files move into WSL (as /repos) and we create a symbolic link to that directory at C:\Repos. This can be as simple as uttering the following PowerShell incantation:

```powershell
New-Item -ItemType SymbolicLink -Path "C:\Repos" -Target "\\wsl.localhost\AlmaLinux9\repos"
```

This should be fairly self-explanatory. In my case, I’m actually mapping to /mnt/wsl/repos, for reasons I’ll explain in the next section.

I have two VS Code workspaces – one for working directly in Windows, and the other for working in remote WSL mode. The Windows workspace points to C:\Repos and the WSL workspace points to /repos. When I restarted both workspaces after making these changes and moving the files into WSL, VS Code saw no difference. The files were still available, as before. But remote WSL operations now ran quicker.

Bonus: share folder with multiple distros

Ah, but what if you need the same folder to be available in more than one distribution? The same /repos folder in AlmaLinux, Oracle Linux and Ubuntu? Not network mapped? Is that even possible?

Absolutely it is. It’s possible through the expedient of mounting an additional virtual hard disk, which becomes available to all distros. This freaks me out slightly, because – what about file system locking? Deadlocks? Race conditions? Okay, calm down Rob, just exercise the discipline of only opening files within one distro at a time. You got this.

Create yourself a new VHDX file. I store mine in roughly the same place WSL stores all its VHDX files:

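```powershell
# Size and file name of the new virtual disk
$DiskSize = 10GB
$DiskName = "Repos.vhdx"

# Store it under %LOCALAPPDATA%\wsl, creating the folder if needed
$VhdxDirectory = Join-Path -Path $Env:LOCALAPPDATA -ChildPath "wsl"
if (!(Test-Path -Path $VhdxDirectory)) {
    New-Item -Path $VhdxDirectory -ItemType Directory
}

# New-VHD comes from the Hyper-V PowerShell module; -Dynamic makes the
# file grow on demand instead of allocating all 10 GB up front
$DiskPath = Join-Path -Path $VhdxDirectory -ChildPath $DiskName
New-VHD -Path $DiskPath -SizeBytes $DiskSize -Dynamic
```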

This gives you a raw, unformatted virtual hard drive at C:\Users\rob\AppData\Local\wsl\Repos.vhdx. Mount it within WSL. From the PowerShell session used above:

```powershell
wsl --mount --vhd $DiskPath --bare
```

Now inside one of your distros, you’ll have a new drive, ready to be formatted to ext4. Easy enough to work out which device is the new drive – sort by timestamp:

```shell
ls -1t /dev/sd* | head -n 1
```

You’ll get something like /dev/sdd. Initialise this disk in WSL:

```shell
sudo mkfs -t ext4 /dev/sdd
```

(Do check you’re working with the correct drive – don’t just copy and paste!)
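Sorting by timestamp usually works, but mkfs is unforgiving, so a second check costs little. A minimal sketch, assuming util-linux's lsblk (present in the mainstream WSL distros); find_blank_disks and list_blank_disks are hypothetical helper names. It asks lsblk for whole disks only and keeps those with neither a filesystem signature nor a partition table, which is what a freshly attached bare VHDX should look like, so the distro's own ext4 and swap disks drop out.

```shell
# find_blank_disks (hypothetical helper): read lines of
# "NAME [FSTYPE] [PTTYPE]" on stdin, as printed by
# `lsblk -dpn -o NAME,FSTYPE,PTTYPE`, and print the device names that
# have neither a filesystem nor a partition table. Read-only.
find_blank_disks() {
  # Empty FSTYPE and PTTYPE columns leave only the NAME field.
  awk 'NF == 1 { print $1 }'
}

# list_blank_disks (hypothetical helper): run the check on real devices.
#   -d  whole disks only, no partitions
#   -p  full device paths, e.g. /dev/sdd
#   -n  no header line
list_blank_disks() {
  lsblk -dpn -o NAME,FSTYPE,PTTYPE | find_blank_disks
}
```

Run list_blank_disks after the bare mount. If it prints exactly one device, and lsblk -dp -o NAME,SIZE shows that device at the size you gave New-VHD, that is the disk to format. If it prints nothing, or more than one device, stop and look more closely before running mkfs.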

Back in the PowerShell session, we unmount the bare drive and remount it. WSL will automatically detect that the disk now contains a single ext4 partition and will mount that under /mnt/wsl – in all distros, mind you.

```powershell
wsl --unmount $DiskPath
wsl --mount --vhd $DiskPath --name repos
```
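Once the remount has run, it may be worth confirming from inside a distro that the ext4 partition really is mounted at /mnt/wsl/repos before trusting it with your files. If the mount had failed, files moved there would land on /mnt/wsl itself, which as far as I know is a tmpfs that doesn't survive a WSL shutdown. A minimal sketch, assuming the standard /proc/mounts layout (device, mount point, type, options, ...) and that the WSL mount shows up there with type ext4; is_mounted_as is a hypothetical name.

```shell
# is_mounted_as MOUNTPOINT FSTYPE [MOUNTS_FILE]  (hypothetical helper)
# Succeeds if MOUNTPOINT appears in the mount table with type FSTYPE.
# MOUNTS_FILE defaults to /proc/mounts; it is a parameter only so the
# function can be tried against a sample file. Note that /proc/mounts
# escapes spaces in paths as \040, so keep the mount name space-free.
is_mounted_as() {
  local mountpoint="$1" want_fs="$2" mounts="${3:-/proc/mounts}"
  # Fields: 1=device 2=mount point 3=filesystem type 4=options ...
  awk -v mp="$mountpoint" -v want="$want_fs" \
    '$2 == mp && $3 == want { found = 1 } END { exit !found }' "$mounts"
}
```

For example: is_mounted_as /mnt/wsl/repos ext4 && echo "repos disk is mounted".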

The drive will now be mounted at /mnt/wsl/repos. If necessary, move any files into this location and create a new symlink at /repos. In WSL:

```shell
shopt -s dotglob
sudo mv /repos/* /mnt/wsl/repos
sudo rmdir /repos
sudo ln -s /mnt/wsl/repos /repos
```

(Here, shopt ensures the move includes any hidden files, whose names begin with ‘.’.)
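The commands above can also be wrapped in a function that is a little harder to misuse. It refuses to run a second time (once /repos is a symlink, a rerun would otherwise fail halfway), checks that the destination exists, confines dotglob to a subshell so it doesn't stay switched on in your interactive shell, and adds nullglob so an empty /repos doesn't pass a literal `/repos/*` to mv. A sketch only; migrate_into is a hypothetical name, and the paths are parameters rather than hard-coded.

```shell
# migrate_into SRC DEST  (hypothetical helper)
# Move everything, dotfiles included, from directory SRC into DEST, then
# replace SRC with a symlink to DEST. If SRC doesn't exist yet, just
# create the symlink.
migrate_into() {
  local src="$1" dest="$2"
  if [[ -L $src ]]; then
    echo "$src is already a symlink; nothing to do" >&2
    return 1
  fi
  if [[ ! -d $dest ]]; then
    echo "$dest is not a directory (is the disk mounted?)" >&2
    return 1
  fi
  if [[ -d $src ]]; then
    # Subshell: dotglob/nullglob apply here only, not in the caller.
    (
      shopt -s dotglob nullglob
      items=("$src"/*)
      if (( ${#items[@]} > 0 )); then
        mv -- "${items[@]}" "$dest"/
      fi
    ) || return 1
    rmdir -- "$src" || return 1
  fi
  ln -s -- "$dest" "$src"
}
```

Because /repos sits in the root directory, this needs root. One way to hand a shell function to sudo is to send its definition along with the call: `sudo bash -c "$(declare -f migrate_into); migrate_into /repos /mnt/wsl/repos"`.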

When sharing this directory into Windows, you need to use the full path /mnt/wsl/repos, not the symlink /repos. But otherwise it works the same as before.
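The symlink's -Target is just the fully resolved Linux path rewritten as a UNC path. If you'd rather not work it out by hand, a small helper run inside the distro can do it: readlink -f follows /repos through to /mnt/wsl/repos, which is exactly the full path Windows needs. A sketch, assuming GNU readlink and the \\wsl.localhost\DistroName naming used above; wsl_unc_target is a hypothetical name, and when no distro is passed it falls back to the WSL_DISTRO_NAME variable that WSL sets in each distro.

```shell
# wsl_unc_target PATH [DISTRO]  (hypothetical helper)
# Print the Windows UNC path (\\wsl.localhost\DISTRO\...) for a Linux
# PATH, resolving symlinks first so that /repos becomes /mnt/wsl/repos.
wsl_unc_target() {
  local distro="${2:-$WSL_DISTRO_NAME}" resolved
  if [ -z "$distro" ]; then
    echo "no distro name given and WSL_DISTRO_NAME is unset" >&2
    return 1
  fi
  resolved=$(readlink -f -- "$1") || return 1
  # Swap every / for \ and prefix the \\wsl.localhost\DISTRO share.
  printf '\\\\wsl.localhost\\%s%s\n' "$distro" \
    "$(printf '%s' "$resolved" | tr '/' '\\')"
}
```

Run inside AlmaLinux9 with /repos symlinked to /mnt/wsl/repos, wsl_unc_target /repos should print \\wsl.localhost\AlmaLinux9\mnt\wsl\repos, ready to pass to New-Item -Target. Recent WSL builds also ship wslpath -w, which performs a similar Linux-to-Windows path translation and may be all you need.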

This mount will not persist across reboots, so create a scheduled task that reruns the wsl --mount command when you log on.

I don’t understand how this solves anything. You’re just creating a symlink into a VHDX file, which resolves to \\wsl.localhost, which you claim you had problems with.

I don’t get it.

Hi Jason. The main difference is that I previously had my working files (repositories) on a Windows filesystem. I would access the files via the automagic mounts within WSL (/mnt/c, for instance). Instead I now have them on the VHDX, formatted with a Linux filesystem (and mounted within WSL). This avoids the key cross-OS performance issue – accessing Windows files from Linux. Instead we access in the opposite direction (access Linux files from Windows).

The VHDX came in because I wanted multiple WSL distros to be able to access the same set of files (without resorting to slow network shares). As many WSL distros as you like can reach the files in the same VHDX.

The symbolic link solves an unrelated problem. Some programs, including CMD.EXE, have limitations when working with \\wsl.localhost (CMD.EXE, for example, can’t change to that UNC path). But those same programs are generally perfectly happy accessing the files through a symlink. To those programs, the symlink just looks like a normal directory on the Windows filesystem.

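In summary:

- Slow access to Windows files from WSL: move the files into WSL.
- Same files needed in several distros: put them on a shared VHDX.
- Programs that struggle with \\wsl.localhost: create a symlink.

Hope this clarifies?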

After running some benchmarks, I’ve noticed that while moving files into WSL and accessing them via \\wsl.localhost speeds things up on the Linux side, it slows down access from Windows apps. So, it seems we’ve just shifted the performance hit from one side to the other.

Do you also experience that?

Hi Yorai,

Yes, I think this is normal, but it’s the lesser of two evils. As great as WSL is, there’s no perfect solution because the filesystems are so fundamentally different (access/locking models, security controls, etc.).

My impression is that accessing WSL files from Windows is quicker than the reverse, and that both are quicker than using, say, a network share.

If I weren’t stuck with Windows for professional reasons, I’d just move to Linux or macOS. Still, life with WSL is much better than having to run separate Linux VMs. I comfort myself with that thought!

Rob

Do I understand correctly that this solution solves the file access performance issues when using Docker too?

So what should I do instead of mounting a folder from an NTFS partition into the container in my docker-compose?

I want to access the files from Windows too.

Could I mount a whole drive (formatted as ext4) in WSL instead of using a VHDX?

I recommend using Docker within WSL rather than from Windows. Then in your `compose.yml` you specify the Linux path rather than a Windows path, and run `docker compose up -d` from WSL, not from a Windows terminal.

Anything that’s mounted in WSL is accessible through the `\\wsl.localhost` path in Windows. This makes it easy to access the WSL files from (e.g.) File Explorer. Bear in mind that `CMD.EXE` can’t change to that UNC path; a PowerShell terminal can, though.

Yes, you can mount a physical drive in WSL. Here’s one way. First get the drive’s ID from a Windows prompt: use `wmic diskdrive list brief`, or `Get-CimInstance -query "SELECT * from Win32_DiskDrive"` from PowerShell. Then mount the drive with, e.g., `wsl --mount \\.\PHYSICALDRIVE2 --bare`. From the WSL distro, use `lsblk` to view the physical disk and treat it as you would in any normal Linux distro. Mounted drives typically appear under `/mnt/wsl`. Then from Windows you can access them via `\\wsl.localhost\DistroName\mnt\wsl\[disk]`.

I don’t know if you can automate this through `/etc/fstab`; I haven’t seen anything in the documentation. But you could run a scheduled task to automount on login. Read more here.

Thanks!

Tried to mount a drive (success!) and stumbled upon two things:

1. The drive to be mounted needs to be taken “offline” in Windows Disk Management.

2. The drive is mounted as the “root” user inside WSL, so by default I can’t interact with the partition from Windows because of missing permissions (for me, “\\wsl.localhost\Ubuntu\mnt\wsl\PHYSICALDRIVE4p1”).

Ah, interesting. I haven’t tried this. Possibly if you partition the drive within WSL, you can then mount those partitions, which should then be visible through the `\\wsl.localhost\` path. I’m guessing. I’ve had no call to do this myself.
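On point 2, one more guess, and it applies equally to /mnt/wsl/repos in the post: mkfs leaves the top-level directory of a new ext4 filesystem owned by root, and as far as I can tell Windows programs reach \\wsl.localhost as the distro’s default user, so they can read that directory but not create anything in it. Handing just that top-level directory to your own user should be enough. The sketch below only checks whether that’s needed; needs_chown is a hypothetical helper and assumes GNU stat, as shipped in the usual WSL distros.

```shell
# needs_chown PATH [UID]  (hypothetical helper)
# Succeeds (exit 0) if PATH is NOT owned by UID, i.e. a chown is needed.
# UID defaults to the current user. Returns 2 if PATH does not exist.
needs_chown() {
  local path="$1" uid="${2:-$(id -u)}"
  if [ ! -e "$path" ]; then
    echo "no such path: $path" >&2
    return 2
  fi
  # GNU stat: %u prints the owner's numeric user ID.
  [ "$(stat -c '%u' -- "$path")" != "$uid" ]
}
```

For example: `needs_chown /mnt/wsl/PHYSICALDRIVE4p1 && sudo chown "$(id -u):$(id -g)" /mnt/wsl/PHYSICALDRIVE4p1`. This deliberately changes only the top-level directory, not everything beneath it (no -R).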